So hey! Here you are. After long searches and plenty of finger taps, you have come to the right place to get an answer to your question, “How To Rank #1 on Google Search Results”, and to all the sub-questions that come under it. How do you get your site to the number 1 rank on Google? Is that what you are looking for?

Well then, welcome to the bible of SEO, or perhaps a mini bible, where you can quench your thirst for answers to those long-standing questions. We shall dig deeper and deeper to get the facts clear. You might have heard of SEO, the mantra for winning the first place in the Google rankings.

Let’s get to know it a little.

What is SEO?

SEO, or search engine optimization, is the process of making your content, your site or any web page friendly to a search engine’s algorithm so that it can rise to the higher ranks of that search engine. And when we talk about search engines, one name comes to mind first: Google, a name that itself comes from the word “googol”. Well, what I have presented above is just a drop in the ocean. It is an overview of what SEO is and what role it plays in Google rankings. SEO befriends Googlebot and Google’s algorithms to earn higher ranks.

Pufft! A new term again: Googlebot. Well, no need to worry, as I am here to explain. Just sit back, take in what follows and apply it to your own work.

What is Googlebot?

Let’s see what Googlebot and Google’s algorithms are and what role they play in ranking. Googlebot is the web crawler used by Google to find and retrieve web pages. The information it gathers is used to update the Google Index. Googlebot visits billions of pages all over the web. It retrieves the content of each page, notes which other pages that page links to, and sends whatever it collects back to Google.

Now you might be wondering what on God’s green earth a web crawler or the Google Index is. Well, allow me to define the terms. A web crawler is a type of software designed to follow links, gather information and send what it collects back to the search engine, here Google. The Google Index, in turn, stores the content received from Googlebot, and Google uses it to rank pages according to its algorithms. I can sense your doubt about what Googlebot has to do with your website. Well, for your web pages to be found on Google, they must first be visited by Googlebot. And for a page to rank on Google, it must be accessible to Googlebot.
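
To make the “follow links” part concrete, here is a minimal sketch in Python of how a crawler might pull the links out of one page. It is only an illustration and not how Googlebot itself is built; the `LinkCollector` class, the `extract_links` helper and the sample page are all made up for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the absolute URL of every <a href="..."> found on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the URL of the page itself.
                self.links.append(urljoin(self.base_url, value))


def extract_links(html, base_url):
    """Return every link on the page, in the order it appears."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links


# Hypothetical page content standing in for a page the crawler has fetched.
page = '<p><a href="/about">About</a> <a href="https://example.org/">Partner</a></p>'
print(extract_links(page, "https://example.com/"))
```

A real crawler would then visit each collected link in turn, which is how one page leads it to the next.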

Basic Introduction to Google Algorithms to let you understand the process of ranking your site on Google

Google uses various algorithms that determine how well your site ranks in search results. Let’s have a look at them and see what each one does.

Google Panda

This algorithm analyses the content on a web page and evaluates its quality for ranking. It checks whether the content is duplicated, plagiarized or thin, whether it is user-generated spam, and whether it is stuffed with keywords.

Google Penguin

Backlinks are links from other websites that point to yours. Google Penguin checks those backlinks and verifies whether they come from trustworthy sources. It also looks for spammy or irrelevant links and for links with over-optimized anchor text.

Google Pigeon and Google Possum

These two algorithms handle location-based searches and decide whether content is good enough to rank in local search results. Pigeon works on searches in which the user’s location plays an important role. It created closer ties between the local algorithm and the core algorithm, i.e. traditional ranking factors are now used to rank local results. Possum helps surface physical places near the searcher’s location.

Google Hummingbird

Keywords aren’t everything. Maybe the best content isn’t keyword-friendly, or maybe the most keyword-friendly content isn’t what you are looking for. Google Hummingbird works out the searcher’s intent and brings up the relevant content.

Google RankBrain

RankBrain is the artificial intelligence side of Google: a machine-learning system that helps Google interpret search queries. It uses what it learns to judge which content is relevant enough to appear on the first pages of Google.

Google Fred

The world is full of hackers and frauds, but Google does not spare them. That’s where Google Fred comes in. It helps detect black-hat techniques and penalizes the sites that use them so that it won’t happen again. Not only this, it also looks at ad-heavy pages and checks whether the quality of the content is up to the mark.

Google Mobile Friendly

Come on! Who isn’t on mobile today? Google has to be there as well. It pushes sites to be mobile friendly and has introduced mobile-first indexing too.

Now let’s come back to the question.

How should we optimize our website to rank it higher?

This is a step-by-step process in which you can’t jump ahead or skip a step. Before starting with optimization, you first need a proper analysis of what is on your website and where it currently ranks. There are many tools which can help you with that, such as site checkup, iwebchk and Moz. These tools show you what the weaknesses of your site are and how to remove them. Other tools guide you on how to do good keyword research and how to work those keywords into your content, for example Google AdWords Keyword Planner and keyword.io.

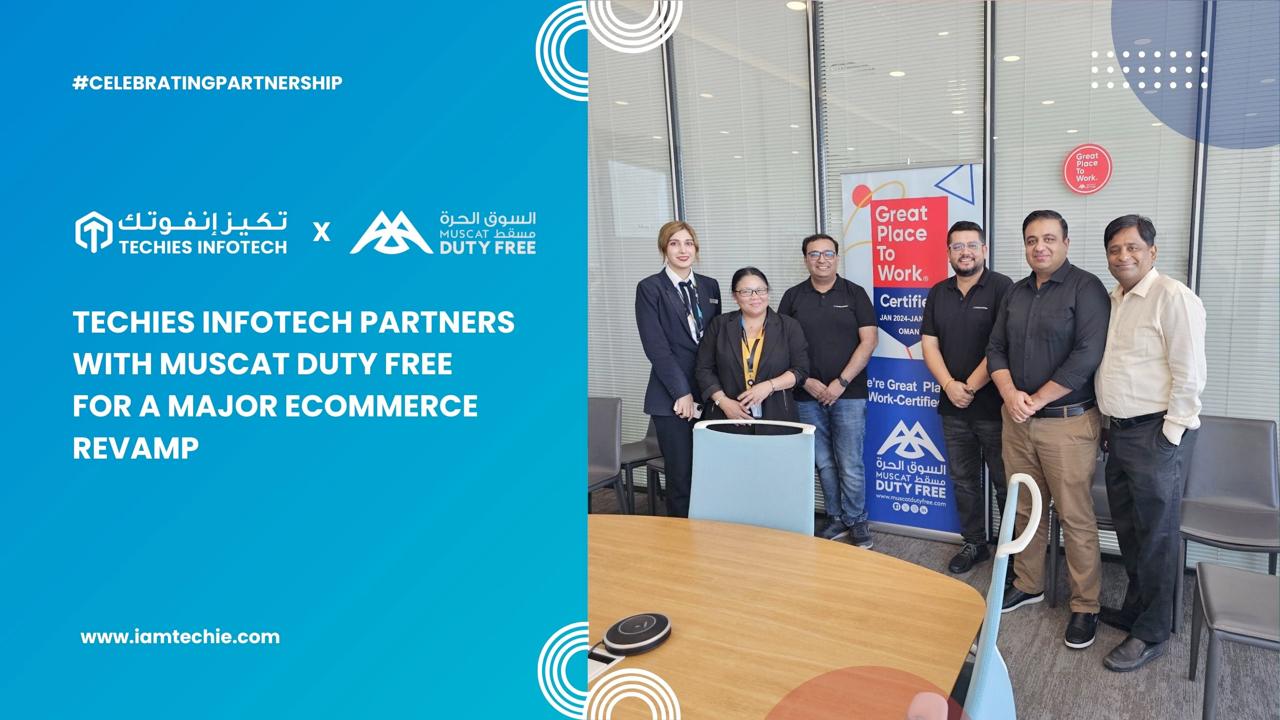

Techies Infotech is the best company in Dubai, with years of experience befriending Google algorithms, and it provides a free analysis of your website as well. Call our experts today to see where you stand and how much our help can do.

After the analysis comes the optimization. Let’s start with On-Page Optimization, where we need to take care of the following things.

Meta Tag Optimization

Meta tags are snippets of text used to describe a page’s content. They do not appear on the page itself but are included in its HTML source code, where Google reads them. Hence you need to choose the best keywords for your meta tags, as these define your web page to search engines.

Meta Title

Your title is the first thing that both the viewer and Google see. Make sure it contains your main keywords, placed in such a way that the title compels the viewer to visit your site.

Meta Description

The richer your meta description, the better your optimization. Keep in mind that the meta description should include your keywords throughout while still reading naturally. The description should be compelling enough to make a person want to view the site at first glance.
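
If you want to check what title and description a page actually carries, a small Python sketch like the one below can read them out of the HTML. The `MetaReader` class, the `read_meta` helper and the sample page are hypothetical names used only for illustration.

```python
from html.parser import HTMLParser


class MetaReader(HTMLParser):
    """Reads the <title> text and the meta description from an HTML page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Only text that sits between <title> and </title> belongs to the title.
        if self._in_title:
            self.title += data


def read_meta(html):
    """Return (title, description) for the given HTML source."""
    reader = MetaReader()
    reader.feed(html)
    return reader.title.strip(), reader.description.strip()


# Hypothetical page head used only for this example.
sample = """<html><head>
<title>Handmade Leather Bags | Example Shop</title>
<meta name="description" content="Browse handmade leather bags with free delivery.">
</head><body></body></html>"""

print(read_meta(sample))
```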

Some more points you need to keep in mind are:

Robots.txt

This file instructs Googlebot which pages to crawl and which not to. Hence, your ranking depends heavily on the pages you allow Googlebot to crawl. For this, you need to upload a robots.txt file to the root of your site.
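
As a rough sketch of how such rules are read, Python’s standard `urllib.robotparser` module can parse a robots.txt file and tell you whether a crawler may fetch a URL. The rules and URLs below are invented for the example, and Python’s parser is only an approximation of how Googlebot itself interprets the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: block the admin area, allow everything else.
robots_txt = """\
User-agent: *
Disallow: /admin/
Allow: /
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# A public page is allowed, a page under /admin/ is not.
print(parser.can_fetch("Googlebot", "https://example.com/blog/seo-basics"))
print(parser.can_fetch("Googlebot", "https://example.com/admin/login"))
```

Running a check like this before uploading the file helps you avoid blocking pages you actually want ranked.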

Sitemap.xml

This is a file which lists all the URLs of the site. It helps Google crawl those URLs more efficiently. You need to upload a sitemap.xml file to your site to help Google do so.
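
Here is a minimal sketch of generating such a file with Python’s standard library. The `build_sitemap` helper and the example URLs are assumptions made for illustration; the XML namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML listing each URL in its own <url><loc> entry."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages of a small site.
sitemap = build_sitemap(["https://example.com/", "https://example.com/contact"])
print(sitemap)
```

You would save the returned text as sitemap.xml and upload it to your site.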

Alt tag fixings

Basically, Google doesn’t understand images well, but it can read the alt text, which explains what the image is about. Hence, you ought to be careful when you write alt text.

Unique, grammatically correct content

Make sure that the content you write is unique, user-friendly and grammatically correct, and not stuffed with too many keywords.
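
To find images that still need alt text, you could scan a page with a short Python sketch like this one. The `MissingAltFinder` class and the sample HTML are hypothetical. Note the assumption that an empty alt counts as missing, which will also flag purely decorative images.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Records the src of every <img> whose alt text is absent or blank."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # Assumption for this sketch: a blank alt is treated as missing.
        if not (attributes.get("alt") or "").strip():
            self.missing.append(attributes.get("src"))


def images_without_alt(html):
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


# Hypothetical page fragment with one described and two undescribed images.
sample = (
    '<img src="bag.jpg" alt="Brown handmade leather bag">'
    '<img src="banner.png">'
    '<img src="logo.png" alt="">'
)
print(images_without_alt(sample))
```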

Making website mobile friendly

With Google’s mobile-first initiative, its algorithms now give more weight to sites which are mobile friendly.

Canonicalization Fixing

The world moves fast and looks for shortcuts. People want to reach your site just by typing its name. They don’t want to waste time on http:// or www. This is where canonicalization helps. It makes your site reachable even without these prefixes, redirecting every variant to the same URL so that Google won’t consider them duplicate pages. Through canonicalization, Google treats a URL with www, with http://, with both or with neither as a single page. To fix canonicalization, we use this code in the .htaccess file of the site:

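RewriteEngine On

# Match requests that arrive on the www host (case-insensitive)

RewriteCond %{HTTP_HOST} ^www\.example\.com$ [NC]

# Permanently redirect every path to the same path on the bare domain

RewriteRule ^(.*)$ http://example.com/$1 [R=301,L]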
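
The idea behind the redirect can also be sketched in Python: every variant of an address should collapse to one canonical URL. The `canonical_url` helper below is made up for illustration. It assumes the canonical form is the bare domain over http, as in the rule above, and it deliberately ignores ports and fragments.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url, scheme="http"):
    """Map www / non-www and scheme variants of a URL to one canonical form."""
    if "://" not in url:
        # The address was typed without a scheme, so add one before parsing.
        url = f"{scheme}://{url}"
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if host.startswith("www."):
        host = host[len("www."):]
    path = parts.path or "/"
    # Port and fragment are dropped on purpose in this simplified sketch.
    return urlunsplit((scheme, host, path, parts.query, ""))


for variant in ["example.com", "www.example.com",
                "http://example.com/", "http://WWW.example.com"]:
    print(variant, "->", canonical_url(variant))
```

All four variants come out as the same address, which is exactly what you want Google to see.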

Correct Website URL structure

Google likes everything clean and in its place. It wants a clear path to where your page is. No stories; nothing extra. Hence you need to write the web address of each page carefully. The URL structure needs to be understandable.

Make your website load Fast

Viewers too prefer sites which load fast, and Google prioritizes the user experience. Analyze the speed of your web page with Google PageSpeed Insights and follow its recommendations to make it faster. The faster the site, the better the user experience and the better the ranking.

Optimize images

As mentioned before, Google can’t read images well, but it can certainly read the alt text provided with them. Hence write the alt text wisely and it will befriend the Google algorithms. Also, compress your images so that they load quickly and improve the website’s speed.

Increase Site Speeds

Minify JavaScript, CSS, and HTML – By reducing the size of the code and combining files, you can make your page load faster. This in turn means a higher preference among customers and with Google.

Use gzip compression – Have the server compress pages before sending them so that loading time goes down. Through this, the size of a page can be reduced by almost 70%. This, in turn, increases customer preference, and your page might get into the good books of Google.

The code used for gzip compression is given below. Copy it into the .htaccess file; it takes effect only on servers where the mod_gzip module is loaded.

<ifModule mod_gzip.c>

mod_gzip_on Yes

mod_gzip_dechunk Yes

mod_gzip_item_include file \.(html?|txt|css|js|php|pl)$

mod_gzip_item_include handler ^cgi-script$

mod_gzip_item_include mime ^text/.*

mod_gzip_item_include mime ^application/x-javascript.*

mod_gzip_item_exclude mime ^image/.*

mod_gzip_item_exclude rspheader ^Content-Encoding:.*gzip.*

</ifModule>
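
To get a feel for how much gzip can save, here is a small Python sketch that compresses some text and reports the saving. The `gzip_saving` helper and the sample page are made up for this example. Since the sample is highly repetitive, it compresses far better than a real page would, so treat the number it prints as an illustration only.

```python
import gzip


def gzip_saving(data: bytes) -> float:
    """Return the fraction of bytes saved by gzip-compressing the data."""
    if not data:
        return 0.0
    return 1 - len(gzip.compress(data)) / len(data)


# Hypothetical page body; real pages are less repetitive and save less.
sample_page = ("<p>Search engine optimization helps pages rank.</p>\n" * 200).encode("utf-8")
print(f"saved {gzip_saving(sample_page):.0%} of {len(sample_page)} bytes")
```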

Page Cache – Set up your pages so that the browser can keep a cached copy, so that when a viewer hits the back button, he sees the same content without the loading time. This can be done through the following code placed in the .htaccess file.

<ifModule mod_headers.c>

# YEAR

<FilesMatch "\.(ico|gif|jpg|jpeg|png|flv|pdf)$">

Header set Cache-Control "max-age=29030400"

</FilesMatch>

# WEEK

<FilesMatch "\.(js|css|swf)$">

Header set Cache-Control "max-age=604800"

</FilesMatch>

# 45 MIN

<FilesMatch "\.(html|htm|txt)$">

Header set Cache-Control "max-age=2700"

</FilesMatch>

</ifModule>
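
The matching logic of these rules can be sketched in Python to see which header a given file would receive. The `CACHE_RULES` table and the `cache_control_for` helper are hypothetical names; the patterns and max-age values simply mirror the three groups above.

```python
import re

# (file-name pattern, max-age in seconds), mirroring the three groups above.
CACHE_RULES = [
    (re.compile(r"\.(ico|gif|jpg|jpeg|png|flv|pdf)$"), 29030400),
    (re.compile(r"\.(js|css|swf)$"), 604800),
    (re.compile(r"\.(html|htm|txt)$"), 2700),
]


def cache_control_for(path):
    """Return the Cache-Control value for a file, or None if no rule matches."""
    for pattern, max_age in CACHE_RULES:
        if pattern.search(path):
            return f"max-age={max_age}"
    return None


for name in ["logo.png", "site.css", "index.html", "feed.xml"]:
    print(name, "->", cache_control_for(name))
```

Files that match no group, such as feed.xml here, get no Cache-Control header from these rules.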

Header Expiration – An Expires header tells the browser how long it may keep using its cached copy of a file before asking the server for it again. Setting expiry times per file type lets you extend that period where content rarely changes. The following code is used for this (in the .htaccess file).

# BEGIN Expire headers

<ifModule mod_expires.c>

ExpiresActive On

ExpiresDefault "access plus 5 seconds"

ExpiresByType image/x-icon "access plus 2592000 seconds"

ExpiresByType image/jpeg "access plus 2592000 seconds"

ExpiresByType image/png "access plus 2592000 seconds"

ExpiresByType image/gif "access plus 2592000 seconds"

ExpiresByType application/x-shockwave-flash "access plus 2592000 seconds"

ExpiresByType text/css "access plus 604800 seconds"

ExpiresByType text/javascript "access plus 216000 seconds"

ExpiresByType application/javascript "access plus 216000 seconds"

ExpiresByType application/x-javascript "access plus 216000 seconds"

ExpiresByType text/html "access plus 600 seconds"

ExpiresByType application/xhtml+xml "access plus 600 seconds"

</ifModule>

# END Expire headers
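
Lifetimes written in seconds are hard to read at a glance, so here is a short Python sketch that converts the values used above into days, hours and minutes. The `LIFETIMES` table is just a hand-made summary of those values, grouped by kind of file.

```python
from datetime import timedelta

# Seconds taken from the Expires rules above, grouped by kind of file.
LIFETIMES = {
    "images and Flash": 2592000,
    "CSS": 604800,
    "JavaScript": 216000,
    "HTML": 600,
    "everything else": 5,
}

for kind, seconds in LIFETIMES.items():
    # timedelta prints as "N days, H:MM:SS", which is easier to judge.
    print(f"{kind}: {timedelta(seconds=seconds)}")
```

Images and Flash are kept for 30 days, CSS for 7 days, JavaScript for two and a half days, and HTML for 10 minutes.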

Implement Rich Cards in your Website

The better the design of the site, the greater the customer involvement. Use rich cards to give your business a richer presentation in Google results and make it more compelling. Not only this, rich cards also improve how the business appears on Google.

Breadcrumbs Rich Card

This word might look familiar to you. Basically, breadcrumbs help Google understand the path to the content easily. This also makes for better site navigation.
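
Breadcrumbs are usually described to search engines as structured data. The sketch below builds breadcrumb markup in JSON-LD using the schema.org `BreadcrumbList` and `ListItem` types; the `breadcrumb_json_ld` helper and the example trail are assumptions made for illustration.

```python
import json


def breadcrumb_json_ld(trail):
    """Build schema.org BreadcrumbList JSON-LD from (name, url) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }
    return json.dumps(data, indent=2)


# Hypothetical path from the home page down to one article.
markup = breadcrumb_json_ld([
    ("Home", "https://example.com/"),
    ("Blog", "https://example.com/blog/"),
    ("SEO Basics", "https://example.com/blog/seo-basics"),
])
print(markup)
```

The resulting JSON would typically be placed on the page inside a `<script type="application/ld+json">` tag.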

Local Business Page Rich Card

You can change how you appear on the local business page using rich cards and can even link your social media handles there.

Reviews Rich Card

Reviews can be embedded in rich cards to show how well the business is doing. You can show your reviews as a rating between 1 and 5.

Products or Services Rich Card

Add all your products and services, along with images, to rich cards. Then, when a customer searches for a product or service you provide, your rich cards may be shown at the top of the Google search results.

Article / Blog Rich Card

Keep uploading articles to your site regularly. This improves keyword growth and increases your presence on the web. Try answering the most-asked questions through your blogs or articles; this helps in the long run. These too can be used as rich cards and shown at the top of Google search results.

Backlinking

Build backlinks to your site. This strengthens the site and may help people find more than what they were originally looking for.

Now we know what should be done to get the site to the top. However, there are some things which you should avoid doing. If you pursue them, Google might not like it and, instead of taking you to new heights, may just ban or block you from its results. Let’s see what these things are:

Black Hat

Black-hat techniques and hacked sites are what Google hates. If Google gets to know that there is something fishy about the site, it might just ban it from even showing in search results.

Hidden Content

Google doesn’t like anything hidden. You ought to show Google everything your visitors see, or else Google might create a problem for you.

Keyword Stuffing

Use keywords in such a way that they do not seem forced into the content. There is a limit to how often a particular keyword should be used, and you ought to stay within that limit.
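
One simple way to keep an eye on this is to measure keyword density, the share of words in a text that are the keyword. The `keyword_density` helper below is a hypothetical sketch for single-word keywords. It does not encode any official limit; what counts as too much is for you to judge.

```python
import re


def keyword_density(text, keyword):
    """Return the fraction of words in the text that equal the keyword."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    return words.count(keyword.lower()) / len(words)


# Hypothetical sentence: 2 of its 6 words are the keyword.
sample = "SEO tips: good SEO takes time"
print(f"{keyword_density(sample, 'seo'):.1%}")
```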

Cloaking and Doorway Pages

This is a type of Black Hat wherein Google is shown one page but, when the result is clicked, the user gets directed to a different page. This can hurt your site, and Google may even block it or mark it as spam.

Techies Infotech is the best agency in Australia to help you with Google rankings. With almost a decade of experience, we have helped our global clientele climb the stairs to Google rank 1. We have the skilled personnel to help you with site-building and optimization. Reach us now, and together we shall voyage to higher levels.